You might be here because you work with data that rarely arrives clean, structured or thoughtfully named. It shows up with inconsistent types, broken schemas and quiet edge cases that only reveal themselves after the fifth meeting with stakeholders.

That’s the block of stone we’re here to sculpt.

What Is Data Sculpting?

Data sculpting is the discipline of shaping dirty, real-world data into something meaningful and reusable after it’s been collected. It’s what happens between ingestion and interface, turning raw data into something people can use with confidence.

This work is an iterative craft. You shape, test, step back and reshape. You work in layers. You expose what matters and remove what doesn’t. You refine structure slowly and intentionally, often in SQL or Python. You make choices: what to name something, where to break a transformation, what to hide from the end user. These choices aren’t always technical. Often, they’re about how well you understand the business, the data producers and the downstream consumers.

Data sculpting accepts the reality that most data systems are built around ingestion, not understanding. It also accepts that the transformation step is often the first time anyone really looks at the data.

You don’t need to believe in the perfect data model to do this well. You don’t need to memorize every data contract spec or metric layer tool. But you do need a mindset: data should be made structured and meaningful.

There’s no “right way” to sculpt data, but there are better habits. The work starts with whatever’s in front of you (a CSV dump, a table, a warehouse with zero docs) and a question: what is this supposed to mean?

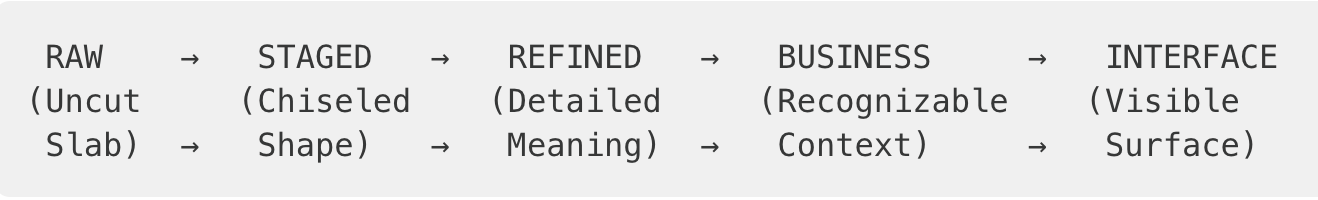

Like sculpting a statue, you start with mass. Then you chisel. Then you refine. Then you reveal the form. Then you present it.

Raw is the slab: a CSV, a JSON blob.

Staged is where you carve out shape: correct types, isolate entities, clean up junk.

Refined is when the structure gets clear: joins, keys, logic, meaning start to emerge.

Business is the form others recognize: revenue, customers, orders, domains.

Interface is what people touch: dashboards, semantic layers, models with labels.

Each step reveals intent.

This is the sculptor’s pipeline. You shape until the meaning holds its form.
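The layers can be sketched as a chain of small functions. This is a minimal illustration, not a prescribed implementation: the JSON blob, the field names (`id`, `amt`, `cust`) and the rule that rows without an amount are dropped are all assumptions made up for the example.

```python
import json

# Raw: the slab, exactly as it arrived (a hypothetical JSON blob).
raw = '[{"id": "7", "amt": "19.90", "cust": " Ada "}, {"id": "8", "amt": "", "cust": "Bo"}]'

def stage(raw_text):
    """Staged: parse, correct types, clean up junk."""
    staged = []
    for record in json.loads(raw_text):
        staged.append({
            "id": record["id"],  # identifiers stay strings
            "amt": float(record["amt"]) if record["amt"] else None,
            "cust": record["cust"].strip(),
        })
    return staged

def refine(staged):
    """Refined: apply logic. ASSUMPTION: rows with no amount are not real orders."""
    return [row for row in staged if row["amt"] is not None]

def to_business(refined):
    """Business: switch to names the organization recognizes."""
    return [
        {"order_id": row["id"], "customer_name": row["cust"], "revenue": row["amt"]}
        for row in refined
    ]

orders = to_business(refine(stage(raw)))
print(orders)  # [{'order_id': '7', 'customer_name': 'Ada', 'revenue': 19.9}]
```

Keeping each layer as its own function means each one can be inspected and tested separately, which is the point of working in layers.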

Why Data Sculpting Exists

Data sculpting emerges out of the gap between how data is supposed to work in theory and how it actually shows up in practice.

In traditional data modeling theory, especially relational modeling, structure comes first. You design tables, define keys and constraints, normalize relationships and ensure that the storage layer reflects a well-understood domain. This approach, formalized by Edgar F. Codd in the 1970s and expanded through data warehouse methodologies in the 1990s (Inmon, Kimball), assumes a world where structure is stable and data sources are known.

In the 2010s, the “big data” movement, driven by cloud storage, event streaming, microservices and distributed systems, shifted how data was handled. Instead of structure-first, the new model was schema-later. Collect everything. Dump it into a lake. Store first, figure it out later.

This led to flexibility and scale, but also fragmentation. The idea of a single canonical model became harder to enforce. Data was versioned and reshaped downstream. The same entity might appear in three systems with five definitions. Semantics became implied, not guaranteed. The infrastructure was powerful, but meaning was lost.

That’s the context for data sculpting.

It’s a practical discipline for working with data after it’s landed. Instead of hoping that raw data arrives in perfect shape, data sculpting accepts that the meaning is carved, not inherited.

It also borrows from adjacent disciplines:

From analytics engineering, it takes versioned, testable transformation layers

From software engineering, it adopts ideas of refactoring, modularity and naming as design

From data governance, it respects that semantics need documentation

From human-centered design, it recognizes that data is consumed by people and people need the surface to make sense

Sculpting isn’t new. It’s what analysts and data engineers have quietly done for years.

The goal is to make sure the data can actually support decisions.

Structure is essential. This is how you create it after the fact.

Doing that well is a craft. One worth learning properly.

Theoretical Foundations of Data Sculpting

The foundations trace back through decades of theory in data modeling, information theory and semiotics. Understanding these roots shows that data sculpting is a grounded evolution of classical modeling.

Data Modeling as Communication

At its core, data modeling is a semantic activity. It encodes meaning into structure so that data can be understood, reused and reasoned about by others. This aligns with the view of modeling as a form of communication, first formalized in Chen’s Entity-Relationship Model (1976), where entities, attributes and relationships represent conceptual understanding of a domain.

In semiotic terms, a data model is a sign system. It maps signifiers (column names, table structures, codes) to signified concepts (real-world entities, states, events). When a model is well-structured, the interpretation of data becomes stable across time and users. When it is poorly structured or missing altogether, the same dataset may yield conflicting meanings depending on the reader’s assumptions.

Data sculpting operates within this space. It is the act of resolving ambiguity after the fact, examining raw data to infer what it likely represents and then encoding that meaning into structure, naming and documentation. In this way, sculpting becomes a reactive form of semantic modeling, still grounded in the need to communicate shared meaning, but working in reverse from the classical approach.

“The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point.”

— Claude Shannon, A Mathematical Theory of Communication (1948)

Sculpting is Shannon’s decoder. It reconstructs the message from a lossy, incomplete signal. The goal is not to perfectly restore the original intent (often unknown), but to create a stable interpretation others can build on.

Information Loss and Deferred Modeling

Information theory helps frame one of the core challenges sculptors face: schema-later ingestion introduces entropy. When data is collected without structure, for example, through loosely typed APIs, CSV exports, or JSON logs, key semantic details are often lost or obscured.

Types become ambiguous. A value like “123” could be a string, a numeric ID, or an actual quantity. Relationships between fields are flattened or implied instead of enforced. Categorical meaning might exist but isn’t stated anywhere. Constraints like uniqueness, dependencies, or units are missing entirely.

This makes sculpting an error-correcting process. The modeler must infer intent by observing distributions, value ranges, temporal patterns and naming conventions. This maps directly to the idea of data as a compressed form of information. What the sculptor is reconstructing is the context that once gave it meaning.

Research in probabilistic modeling (e.g., Nazabal et al., 2020) reflects this dynamic, especially in ML contexts where missing or ambiguous data must be modeled with assumptions and distributions. Sculpting differs in that it makes these assumptions explicit and encodes them in transformation logic and documentation.

Modeling Patterns

In classical data modeling (e.g., Simsion & Witt, 2005), models are built before implementation. This is the “blueprint” approach: start with entities, design relationships, normalize and then build systems around that.

In real-world lakehouse and ELT environments, this pattern has inverted. Data is ingested first, often semi-structured, often undocumented, and modeling happens after ingestion, as a form of progressive refinement. This aligns more closely with agile modeling or refactoring approaches from software engineering (Fowler, 1999), where structure is iteratively improved as understanding evolves.

Data sculpting leans into this inversion. It accepts the messy baseline as a given. It focuses on improving clarity without changing the underlying meaning of the data. It organizes transformation logic into modular, testable components. It favors readable and maintainable structure.

Importantly, sculpting often creates intermediate structures (staging layers, refined models, canonical views) that are stepping stones toward stability. These map conceptually to Fowler’s abstractions, structures that manage complexity without hiding logic.

Pragmatics and Organizational Semantics

The final theoretical strand comes from pragmatics, the study of how meaning is derived from context. In data terms, this means recognizing that the same value ("active", "closed", 42) can mean different things depending on the source system, the business domain, or the time period.

Data sculpting is contextual modeling. It asks two questions: “What did it mean to the person or system that created it?” and “What should it mean to the person who’s going to use it?”

This is where sculpting aligns most with semantic layer design, in the broader sense of building translation layers between technical representations and business logic. The sculptor becomes a translator, fluent in both raw inputs and organizational semantics.
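One way to make that context explicit is to key the translation on the source as well as the value. The sketch below is only an illustration: the source system names (`crm`, `billing`) and the business meanings are invented, and `decode_status` is a hypothetical helper.

```python
# ASSUMPTION: source names and meanings are invented for illustration.
STATUS_MEANINGS = {
    ("crm", "active"): "customer_has_open_contract",
    ("billing", "active"): "invoice_awaiting_payment",
    ("billing", "closed"): "invoice_paid",
}

def decode_status(source_system, raw_value):
    """Translate a raw value into a business meaning, given where it came from."""
    key = (source_system, str(raw_value).strip().lower())
    # Unknown combinations are surfaced as "unmapped" instead of being guessed.
    return STATUS_MEANINGS.get(key, "unmapped")

print(decode_status("crm", "active"))      # customer_has_open_contract
print(decode_status("billing", "Active"))  # invoice_awaiting_payment
print(decode_status("crm", 42))            # unmapped
```

The same raw value resolves to different meanings depending on its origin, and anything the table does not cover stays visibly unresolved.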

How Data Sculpting Actually Happens

Exploring & Decoding (RAW → REFINED)

You start with triage. Most raw tables are bloated. Two hundred columns, ten that matter. You skim column names, group by prefix or suffix, run quick counts on NULLs and uniques and narrow the field. You’re just trying to separate signal from junk.
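A triage pass like this can be sketched in a few lines of plain Python. The sample rows and column names are made up, and treating empty strings as missing is an assumption that will not hold for every source.

```python
from collections import defaultdict

# Hypothetical sample rows standing in for a wide raw table.
rows = [
    {"cust_id": "1", "cust_name": "Ada", "legacy_flag": None, "tmp_col": ""},
    {"cust_id": "2", "cust_name": "Bo", "legacy_flag": None, "tmp_col": ""},
    {"cust_id": "3", "cust_name": None, "legacy_flag": None, "tmp_col": ""},
]

def profile_columns(rows):
    """Return {column: {"null_rate": float, "distinct": int}} for a list of dict rows."""
    columns = sorted({key for row in rows for key in row})
    profile = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # ASSUMPTION: None and empty strings both count as missing.
        present = [v for v in values if v not in (None, "")]
        profile[col] = {
            "null_rate": 1 - len(present) / len(values),
            "distinct": len(set(present)),
        }
    return profile

def group_by_prefix(columns):
    """Group column names by the text before the first underscore."""
    groups = defaultdict(list)
    for col in columns:
        groups[col.split("_", 1)[0]].append(col)
    return dict(groups)

profile = profile_columns(rows)
# Columns that are entirely empty are the first candidates to set aside.
dead_columns = [col for col, stats in profile.items() if stats["null_rate"] == 1.0]
print(dead_columns)              # ['legacy_flag', 'tmp_col']
print(group_by_prefix(profile))  # {'cust': ['cust_id', 'cust_name'], 'legacy': ['legacy_flag'], 'tmp': ['tmp_col']}
```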

Then comes the guesswork. You see a column called status with values like 1, 2, 4, 7. No documentation. You check value distributions, look at how they change over time, maybe join it to other tables. You might ask around, but often nobody really knows. So you build your own theory, test it and write it down. You’re decoding, trying to make meaning out of what barely qualifies as a signal.
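Here is a rough sketch of that decoding loop: count each code per month, note when it first appears, and write the working theory down next to the evidence. The records and the labels in `STATUS_THEORY` are invented; they show the shape of the exercise, not real meanings.

```python
from collections import Counter, defaultdict

# Hypothetical (status, created_month) pairs from an undocumented table.
records = [
    (1, "2023-01"), (1, "2023-01"), (2, "2023-01"),
    (1, "2023-02"), (4, "2023-02"), (4, "2023-02"),
    (7, "2023-03"), (4, "2023-03"), (7, "2023-03"),
]

def status_by_month(records):
    """Count how often each status code appears in each month."""
    counts = defaultdict(Counter)
    for status, month in records:
        counts[month][status] += 1
    return counts

def first_seen(records):
    """Return the earliest month in which each status code appears."""
    seen = {}
    for status, month in records:
        # "YYYY-MM" strings sort chronologically, so string comparison is enough.
        if status not in seen or month < seen[status]:
            seen[status] = month
    return seen

# Working theory, written down so it can be challenged later.
# ASSUMPTION: these labels are guesses for illustration, not documented facts.
STATUS_THEORY = {1: "created", 2: "cancelled", 4: "shipped", 7: "returned"}

for month, counter in sorted(status_by_month(records).items()):
    print(month, dict(counter))
print(first_seen(records))  # {1: '2023-01', 2: '2023-01', 4: '2023-02', 7: '2023-03'}
```

A code that only shows up from a certain month onward often points to a system change, which is a useful question to bring to whoever might remember it.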

Some tables don’t even tell you what they are. No primary key, no clear entity, no naming pattern. You poke around to figure out whether each row is an event, a user, a transaction, or just some broken artifact of another system. You hunt for fields that repeat or stay consistent. You follow your hunches. Eventually, the shape starts to emerge.
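One quick way to test a hunch about what a row represents is to check whether a candidate set of columns identifies every row. The rows and column names below are hypothetical, and `is_unique_key` is an illustrative helper.

```python
from collections import Counter

# Hypothetical rows from a table with no declared primary key.
rows = [
    {"order_id": "A1", "line_no": 1, "sku": "X"},
    {"order_id": "A1", "line_no": 2, "sku": "Y"},
    {"order_id": "B7", "line_no": 1, "sku": "X"},
]

def is_unique_key(rows, columns):
    """True if the combination of `columns` identifies every row uniquely."""
    keys = Counter(tuple(row[col] for col in columns) for row in rows)
    return all(count == 1 for count in keys.values())

print(is_unique_key(rows, ["order_id"]))             # False
print(is_unique_key(rows, ["order_id", "line_no"]))  # True
```

If `order_id` alone repeats but `order_id` plus `line_no` does not, each row is probably an order line, not an order. Uniqueness in a sample is evidence, not proof.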

Types are usually wrong. Strings that should be numbers, booleans represented as “Y” or “1” or sometimes just an empty string. You cast, clean, recode and gradually push the data toward something structurally sane. It’s not glamorous but getting the types right is foundational. Everything else depends on it.
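A minimal sketch of that kind of cleanup, assuming a fixed list of boolean spellings and assuming that an empty string means "unknown", not false. Both assumptions need checking against the actual source. `to_bool` and `to_int` are hypothetical helpers.

```python
TRUE_VALUES = {"y", "yes", "1", "true", "t"}
FALSE_VALUES = {"n", "no", "0", "false", "f"}

def to_bool(value):
    """Map messy boolean encodings to True, False, or None (unknown)."""
    if value is None:
        return None
    text = str(value).strip().lower()
    if text in TRUE_VALUES:
        return True
    if text in FALSE_VALUES:
        return False
    # ASSUMPTION: an empty or unrecognized value means "unknown", not False.
    return None

def to_int(value):
    """Cast to int, returning None instead of raising on junk."""
    try:
        return int(str(value).strip())
    except ValueError:
        return None

print(to_bool("Y"), to_bool("1"), to_bool(""))  # True True None
print(to_int(" 42 "), to_int("12.5"), to_int(None))  # 42 None None
```

Not every numeric-looking string should be cast. An identifier like "123" is usually safer left as a string, since nobody sums IDs and leading zeros can matter.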

Refining & Documenting (REFINED → BUSINESS)

Deciding where to stop is part of the job. You don’t fix everything. You don’t fold all logic into a single mega-query. You isolate transforms into stages, make judgment calls about what belongs where and leave TODOs where things are still unclear. You treat ambiguity like debt: document it, contain it, but don’t ignore it.

Naming matters. A lot. You rename value1 to start_date once you realize what it means. You rewrite flag as is_active so nobody has to guess. You pick names that make structure obvious. Naming is modeling. Done well, it removes the need for explanation.
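The renames can live in one small map, so the decision is recorded in a single place. This sketch uses the two examples from the paragraph above; `rename_columns` is a hypothetical helper.

```python
# Renames taken from the examples in the text; the map doubles as documentation.
RENAMES = {"value1": "start_date", "flag": "is_active"}

def rename_columns(row, renames):
    """Return a copy of `row` with keys renamed; unlisted keys pass through unchanged."""
    return {renames.get(key, key): value for key, value in row.items()}

print(rename_columns({"value1": "2024-01-01", "flag": True, "id": 3}, RENAMES))
# {'start_date': '2024-01-01', 'is_active': True, 'id': 3}
```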

You chase edge cases. NULLs that mean two different things. Joins that fail silently. Timestamps that look fine until you realize half are in UTC and half in EEST. These aren’t rare exceptions; they’re the shape of the data. If you don’t surface them early, they’ll bite someone downstream.
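The mixed-timezone case can be handled by recording which zone each source writes in and converting everything to UTC. In this sketch the source names (`billing`, `crm`) are invented, and EEST is modeled as a fixed UTC+3 offset, which is a simplification: a real region alternates between summer and winter offsets.

```python
from datetime import datetime, timedelta, timezone

# EEST is UTC+3. ASSUMPTION: a fixed offset is good enough for this illustration.
EEST = timezone(timedelta(hours=3), "EEST")

# ASSUMPTION: each source system is known to write naive timestamps in one zone.
SOURCE_TIMEZONES = {"billing": timezone.utc, "crm": EEST}

def to_utc(naive_text, source):
    """Parse a naive ISO timestamp and convert it to UTC based on its source system."""
    local = datetime.fromisoformat(naive_text).replace(tzinfo=SOURCE_TIMEZONES[source])
    return local.astimezone(timezone.utc)

print(to_utc("2024-07-01 12:00:00", "billing").isoformat())  # 2024-07-01T12:00:00+00:00
print(to_utc("2024-07-01 12:00:00", "crm").isoformat())      # 2024-07-01T09:00:00+00:00
```

Two rows with the same wall-clock text end up three hours apart, which is exactly the kind of difference that stays invisible until someone joins the two sources.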

You sketch models to learn. You write throwaway code, run LEFT JOINs to test assumptions, count rows over time to find data gaps or pipeline resets. These prototypes aren’t for production. They’re how you figure out what the data even is.
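A throwaway prototype of this kind might look like the sketch below, using an in-memory SQLite database from Python's standard library. The tables, columns and rows are hypothetical. The first query tests the assumption that every order has a customer; the second counts rows per day to spot gaps.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id TEXT, customer_id TEXT, order_date TEXT);
    CREATE TABLE customers (customer_id TEXT, name TEXT);
    INSERT INTO orders VALUES
        ('o1', 'c1', '2024-01-01'),
        ('o2', 'c2', '2024-01-01'),
        ('o3', 'c9', '2024-01-03');
    INSERT INTO customers VALUES ('c1', 'Ada'), ('c2', 'Bo');
""")

# Assumption under test: every order has a matching customer.
orphans = conn.execute("""
    SELECT o.order_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.customer_id = o.customer_id
    WHERE c.customer_id IS NULL
""").fetchall()

# Rows per day; a missing date hints at a data gap or pipeline reset.
daily_counts = conn.execute("""
    SELECT order_date, COUNT(*) FROM orders
    GROUP BY order_date ORDER BY order_date
""").fetchall()

print(orphans)       # [('o3',)]
print(daily_counts)  # [('2024-01-01', 2), ('2024-01-03', 1)]
```

An inner join would have silently dropped order `o3`. The LEFT JOIN with an `IS NULL` filter makes the failed match visible.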

Code is memory. You don’t just write logic, you leave notes, questions, warnings. You write “not sure if this is always true” because it won’t be obvious a month from now. You make it readable first, fast second. Because someone else will have to maintain it. Maybe that someone is you.

Exposing (INTERFACE)

And finally, once the form holds, you decide what to surface. You expose only what matters. You pick what fields make it into the final layer. You write descriptions. You label things clearly. You clean up to prevent confusion.
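One lightweight way to do this is to keep the exposed fields and their descriptions in a single structure and select only those. The field names and descriptions here are illustrative assumptions, and `expose` is a hypothetical helper.

```python
# ASSUMPTION: field names and descriptions are illustrative.
EXPOSED_FIELDS = {
    "order_id": "Unique identifier of the order.",
    "customer_name": "Customer display name, trimmed.",
    "revenue": "Order amount in the source currency.",
}

def expose(rows, exposed_fields):
    """Keep only documented fields, in the documented order."""
    return [{name: row[name] for name in exposed_fields} for row in rows]

internal_rows = [
    {"order_id": "7", "customer_name": "Ada", "revenue": 19.9, "_load_batch": 12, "tmp_flag": "x"},
]
print(expose(internal_rows, EXPOSED_FIELDS))
# [{'order_id': '7', 'customer_name': 'Ada', 'revenue': 19.9}]
```

A field with no description cannot reach the final layer, so the documentation and the interface stay in step.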

That’s the job. Just making the raw stuff usable, line by line, choice by choice, until someone else can look at it and say, “Yeah. That makes sense.”

Final Thought

What makes sculpting distinct is when and how it is applied: after the data is collected, when information is partial and structure is missing but still needed.

In that space, the sculptor works as a restorer, revealing the shape that was hidden and making it usable again.